Model Context Protocol (MCP): The Open Standard for AI Tool Integration

TL;DR

- What is MCP? The Model Context Protocol (MCP) is an open standard that creates a universal language for AI assistants and LLMs to securely connect with external tools, APIs, and data sources

- Why is it important? It replaces the need for custom, one-off integrations with a single, reusable protocol. This accelerates development, enhances AI agent autonomy, and simplifies maintenance

- How does it work? MCP uses a client-server architecture. An AI application (Host) uses a Client to connect to a lightweight Server, which acts as a secure bridge to an external tool or database

- Key Features: MCP defines standard ways to interact with Resources (data), Tools (executable functions), and Prompts (workflow templates), enabling rich, two-way communication

- MCP vs. Other Tools: MCP is a low-level communication protocol, not a high-level application framework like LangChain. They are complementary: an agent built with LangChain can use MCP to connect to tools without needing custom API code

- Security: MCP integrates the OAuth 2.0 standard for secure, standardized authentication, making it suitable for production and remote connections

Advanced Large Language Models (LLMs) often operate in isolation, limited by their training data and disconnected from live information. To perform meaningful tasks, they must connect to external data, APIs, and business software. This usually requires building complex, custom integrations for each service, a process that is difficult to scale and maintain.

The Model Context Protocol (MCP) is an open standard designed to solve this challenge. Released in late 2024, MCP provides a universal, secure, and two-way communication channel for AI applications to interact with external systems. It replaces fragmented, custom code with a single, standardized connection method, fundamentally changing how AI agents access and use tools.

Why is the Model Context Protocol (MCP) Important?

MCP offers clear benefits for developers creating sophisticated AI agents and automating complex workflows. It provides a more robust and scalable alternative to traditional integration methods.

- Accelerated Tool Integration: MCP enables developers to add new capabilities to AI applications with plug-and-play simplicity. If an MCP server exists for a service, any MCP-compatible AI app can immediately use its functions without custom code. This speeds up development, allowing an AI agent to query a database or make an API call just by connecting to the right server.

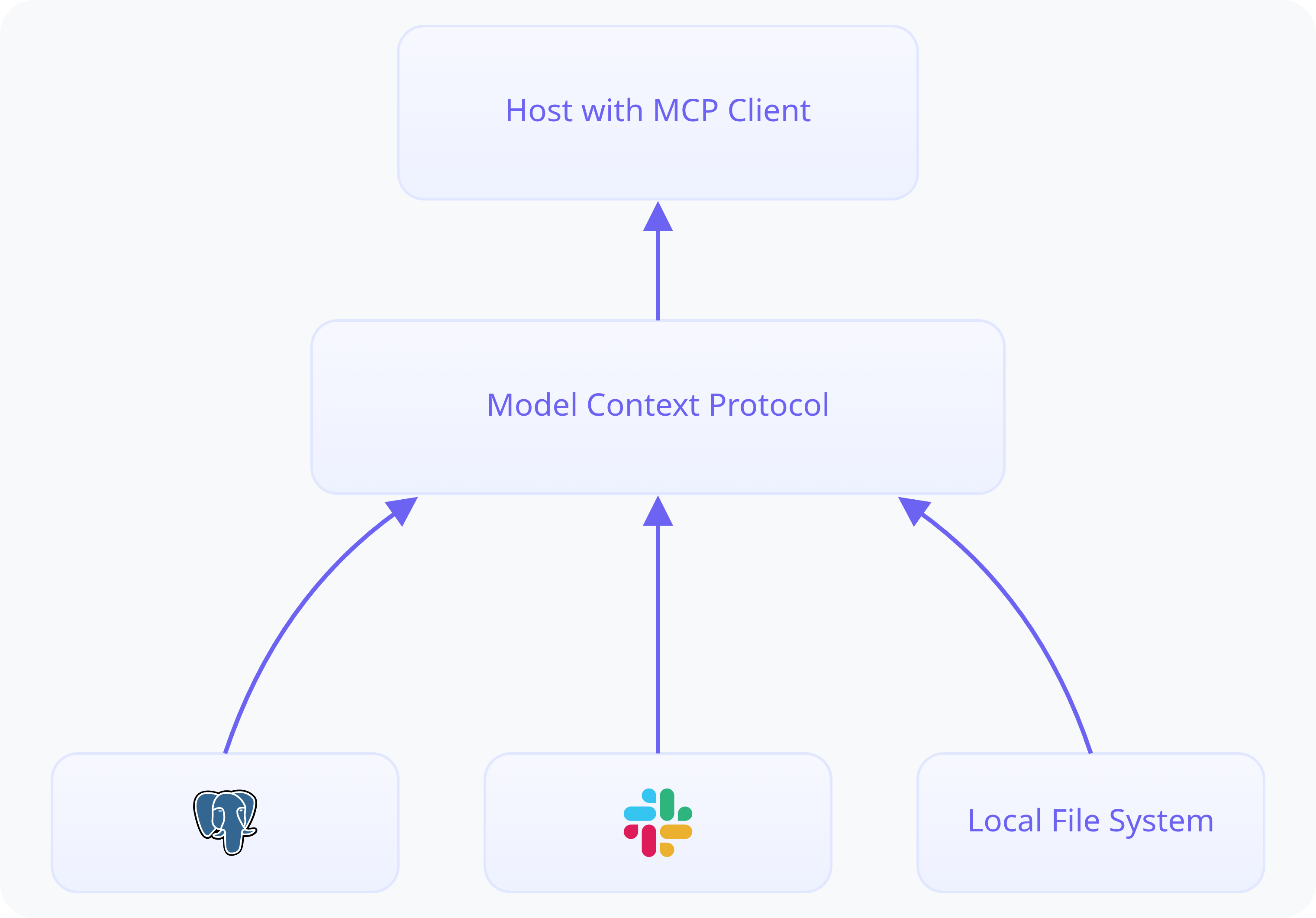

Model Context Protocol (MCP) Architecture - Enhanced AI Autonomy: By enabling direct interaction with external systems, MCP supports more autonomous AI agent behavior. An agent can actively retrieve information from a CRM, send an email, or query a database as part of a multi-step workflow. This moves beyond simple Q&A and toward true automated task completion

- Reduced Integration Complexity: As a universal interface, MCP significantly lowers the effort needed to integrate new APIs or data sources. An application only needs to support one protocol MCP to connect to countless services. This lets development teams focus on core application logic instead of building and maintaining repetitive integration code

- Improved Consistency and Interoperability: MCP uses a standardized request/response format based on JSON-RPC, which simplifies debugging and ensures future compatibility. The interface to external tools remains consistent even if the underlying LLM is swapped out, protecting your development investment

- Rich, Two-Way Contextual Interaction: Traditional tool use is often a one-way street: the AI calls a tool and gets a result. MCP creates a richer, two-way dialogue. A server can do more than just offer executable Tools; it can proactively provide Resources (like a file's content) to give the model context before it acts. Furthermore, it can offer Prompts, which are structured templates that guide the AI through a complex workflow. This creates a true partnership between the AI and its tools, enabling more sophisticated and reliable automation

Understanding the MCP Architecture

MCP operates on a flexible client-server model designed for security and extensibility.

- Hosts: The primary LLM applications that initiate MCP connections, such as an AI assistant like Claude Desktop or an IDE plugin

- Clients: Protocol clients that run inside the host application and manage connections to MCP servers. They are embedded directly into chatbots, coding assistants, or automation agents

- Servers: Lightweight programs that expose specific capabilities (Resources, Tools, Prompts) via the MCP standard. Servers act as secure bridges between the AI application and external systems

- Local Data Sources: Data stored on a user's machine, like files or local databases, that MCP servers can access with permission

- Remote Services: External systems accessible over a network, such as web APIs or cloud databases, that MCP servers connect to

In this setup, the AI application (Host) includes an MCP Client. Each external service connects via a dedicated MCP Server. The server lists its available capabilities, and the client connects to use them. The LLM never communicates directly with the external API, instead, all communication is structured and secured through the standardized MCP client-server process.

Key MCP Concepts Explained

MCP is based on several core ideas that structure how clients and servers interact

- Resources: Represent file-like data or content provided by a server. An LLM reads resources to gain context for a task. Access is controlled by the host application. Resources have unique URIs (e.g.,

file:///path/to/file,postgres://db/table) and can contain text or binary data. Servers can also notify clients when a resource changes - Tools: Executable functions offered by a server that allow an LLM to interact with external systems. Tool use is typically controlled by the AI model for automatic execution. Each tool includes a name, a description for the LLM, and an input schema (using JSON Schema)

- Prompts: Pre-defined templates or instructions provided by a server. Prompts help users or LLMs complete specific tasks more efficiently by guiding them through a workflow

- Roots: URIs suggested by a client to a server to define relevant locations for resources, such as a filesystem path or an HTTP URL. Servers should generally operate within the scope defined by these roots

- Transports: The underlying communication mechanisms that define how MCP messages are exchanged. MCP uses JSON-RPC 2.0 as its standard message format

How MCP Servers and Clients Talk: Transports and Messages

All communication in MCP uses a standard language: JSON-RPC 2.0. This means every message is one of four types: a Request asking for something, a Result returning the answer, an Error if something went wrong, or a Notification for one-way information.

But how these messages travel between the client (in the AI app) and the server (exposing the tool) is defined by a transport. MCP defines two standard transports for two different scenarios.

Transport 1: The Direct Line (Stdio Transport)

Think of this as the simplest, most direct connection possible. It's designed for when the client and server are running on the same machine, which is perfect for local development and testing.

- How it Works: The client application launches the MCP server as a subprocess. They then communicate directly using standard input (

stdin) and standard output (stdout). The client sends a request to the server'sstdin, and the server writes its response back tostdout. - Formatting: Each JSON-RPC message must be a single line of text

- Logging: The server can write debugging logs to standard error (

stderr), which the client can choose to display or ignore - Use Case: Ideal for building and debugging a new MCP server on your local computer before deploying it

Transport 2: The Network Connection (Streamable HTTP Transport)

When your AI application needs to connect to a server over a network, whether on a different machine in your infrastructure or a public service on the internet, you need a more robust transport.

This transport uses standard HTTP POST and GET requests, allowing a single server to handle connections from multiple clients. To enable the rich, two-way communication MCP promises (like progress updates or server-initiated messages), it cleverly uses Server-Sent Events (SSE) to create a persistent stream.

Here’s how it manages this more complex interaction:

- Connection & Session:

- The client sends an initial

POSTrequest to connect - The server responds with a unique

mcp-session-idin the HTTP header - The client must include this session ID in all future requests to maintain the conversation

- The client sends an initial

- Two-Way Communication: To receive messages from the server (like notifications), the client makes a separate

GETrequest. The server keeps this connection open, streaming messages back via SSE. This solves HTTP's traditional request-response limitation - Resilience: If the connection breaks, the server can assign an ID to each event. The client can use the

Last-Event-IDheader to tell the server where to resume, preventing lost messages - Security: For remote connections, security is critical. Implementers must validate the

Originheader and use proper authentication to prevent common web attacks. When running locally, the server should only bind tolocalhost(127.0.0.1) for safety - Configuration Flags: Two flags can alter this behavior for specific needs:

stateless_http=True: This mode removes the need for session IDs, which is essential for environments that require high availability and load balancing across multiple server instances (horizontal scaling). In a stateless setup, any server instance can handle any client request without needing shared session memory. However, this comes at a cost: the server gives up its ability to send unsolicited requests or notifications to the client, making the interaction strictly client-initiatedjson_response=True: This disables streaming forPOSTresponses, returning only a single, final JSON object. This is useful for simpler tools that don't need to send intermediate progress updates

Custom Transports

While Stdio and HTTP are standard, MCP is extensible. Teams can implement their own transports (e.g., using WebSockets or gRPC) as long as they adhere to the core JSON-RPC message structure and lifecycle.

Implementation Resources: SDKs and Examples

To simplify development, MCP provides Software Development Kits (SDKs) for popular programming languages:

You can find quickstart guides and examples for building both client and server applications in the official documentation.

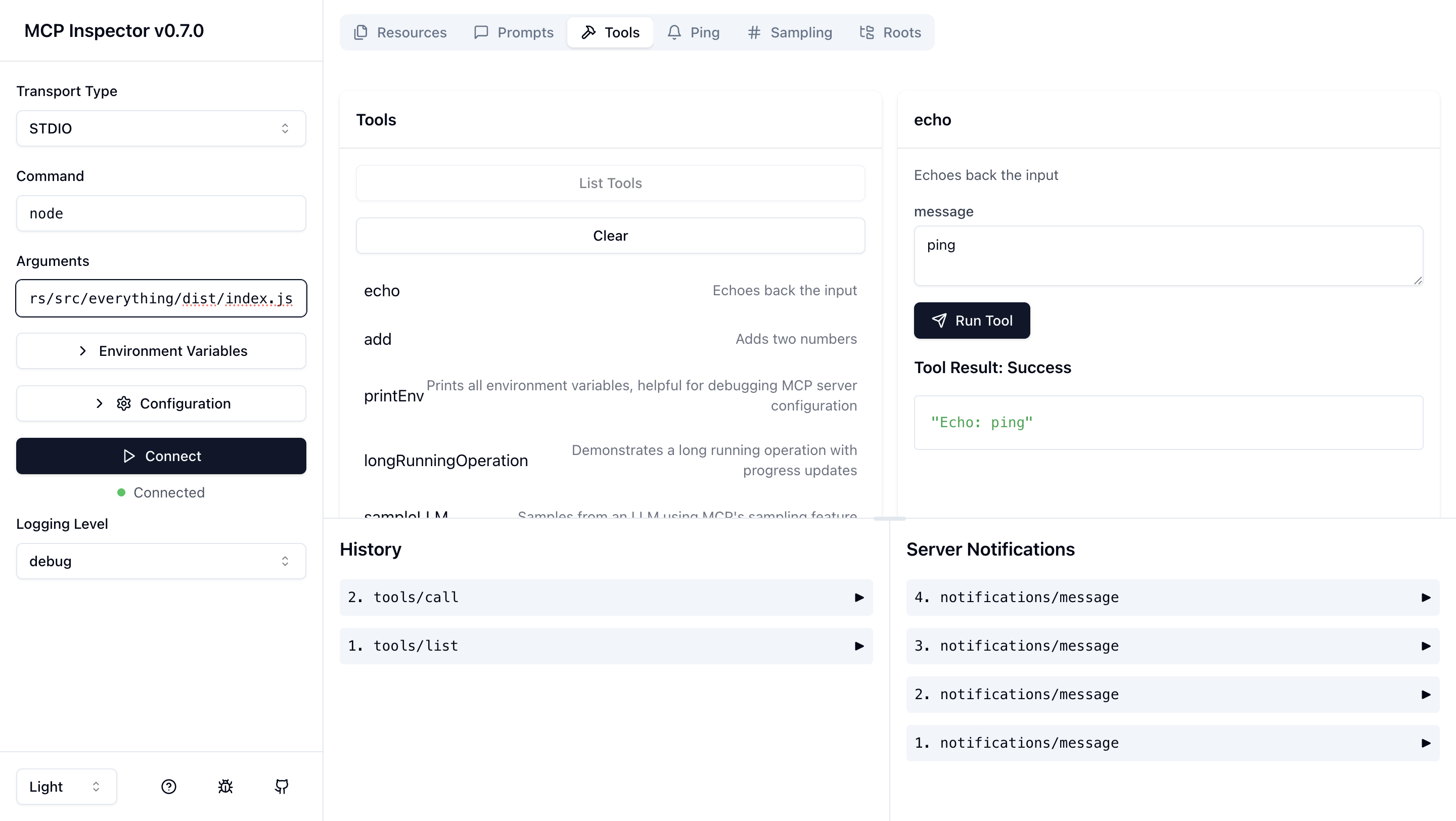

How to Debug and Troubleshoot MCP Integrations

Effective debugging is essential when working with MCP. The ecosystem provides tools and guidance:

- MCP Inspector: An interactive tool built specifically for debugging MCP servers. It provides a detailed, real-time view of messages exchanged between a client and server, making it easier to identify issues.

The MCP Inspector Tool

Secure Authentication with OAuth 2.0

A key improvement in MCP is adopting OAuth 2.0 for secure authentication. Earlier versions lacked a standard method, making secure remote connections difficult and often requiring manual credential handling.

Using OAuth 2.0 provides a reliable and standard framework for secure interactions, particularly for remote servers and multi-user applications. Key advantages are:

- Standardized Security: Uses the established OAuth 2.0 protocol.

- Dynamic Client Registration (DCR): Allows clients to register with authorization servers automatically.

- Automatic Endpoint Discovery: Simplifies setup by allowing clients to find necessary OAuth endpoints.

- Secure Token Management: Ensures safe handling of access tokens, limiting client access to authorized resources only.

This standardized approach significantly improves security and ease of use.

How MCP Compares to Other Integration Approaches

MCP offers a distinct approach compared to other ways of connecting LLMs to external systems:

-

Custom Integrations: MCP removes the need for building fragile, labor-intensive, single-use integrations for each service by providing a reusable, standard protocol.

-

ChatGPT Plugins: While an early step, ChatGPT plugins were proprietary, linked to OpenAI, focused on single interactions, and lacked a universal authentication standard. MCP is an open, model-neutral standard supporting persistent connections and standard OAuth 2.0 security.

-

LLM Tool Frameworks (e.g., LangChain, LlamaIndex): It's best to think of these as operating at different levels of abstraction.

- LangChain and LlamaIndex are high-level application development frameworks. They provide the scaffolding to build an agent's internal logic—its memory, planning capabilities, and decision-making loops.

- MCP is a low-level communication protocol. It standardizes the "last mile" connection between the agent and the external tool itself.

They are not competitors; they are complementary. Think of it this way:

Scenario: Building a GitHub Issue Manager Agent with LangChain

-

Without MCP: Your LangChain agent needs a tool to fetch issues. You would write custom Python code, handles authentication with a personal access token, makes the specific API call to get the issues, and then parses the JSON response. If you later want to add a tool for Slack, you repeat the process for Slack's API.

-

With MCP: Your LangChain agent needs the same tool. Instead of writing custom code, it makes a standardized call to the Github MCP server. The agent's logic doesn't need to know anything about REST APIs, Python libraries, or token authentication. The MCP server handles all the specific implementation details.

MCP standardizes the connection interface, allowing high-level frameworks like LangChain to focus on the agent's internal logic and behavior.

Real-World MCP Applications and Community Engagement

MCP allows AI systems to securely access and utilize live data and perform actions. Developers are already building MCP servers for common tools:

- Brave Search

- Slack

- GitHub

- Databases (PostgreSQL, Redis)

- File systems

These examples show MCP's value in connecting AI agents to company data, developer tools, communication platforms, and essential business systems.

Frequently Asked Questions (FAQ)

What is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is an open-source standard designed to create a universal communication layer between AI models (like LLMs) and external systems. It replaces the need for custom, one-off integrations with a single, reusable protocol, allowing AI agents to securely access data, APIs, and other tools

Why is MCP important for AI development?

MCP is important because it accelerates development, enhances AI agent autonomy, and reduces integration complexity. By standardizing how AI connects to tools, developers can focus on core application logic instead of writing repetitive integration code. This leads to more powerful, scalable, and maintainable AI applications

How does MCP work?

MCP uses a client-server architecture. An AI application (the Host) contains an MCP Client that connects to a lightweight MCP Server. The Server acts as a secure bridge to an external tool or data source (like a database or API). All communication follows the standardized MCP format, ensuring secure and structured interactions

How does MCP compare to LangChain or ChatGPT Plugins?

MCP is complementary to frameworks like LangChain and a more open alternative to proprietary systems like ChatGPT Plugins. LangChain is a high-level framework for building an agent's internal logic, while MCP is a low-level protocol for connecting that agent to external tools. An agent built with LangChain can use MCP to communicate with any MCP-compliant tool without needing custom API code

What are MCP Transports?

MCP Transports are the underlying communication mechanisms for exchanging messages. The two standard transports are Stdio, used for local development where the client and server are on the same machine, and Streamable HTTP, which allows for remote connections over the internet and supports advanced features like streaming with Server-Sent Events (SSE)

📄️ Server Features

Explore the core server features of the Model Context Protocol (MCP), with Python examples for implementing Prompts, Resources, Tools, Logging, and more.